In a highly secure organization, threat modeling must be an integral part of the Secure Release Process (SRP) for cloud services and software. Threat modeling is a security practice for evaluating potential threats to a system or application. It lets organizations find and address vulnerabilities before attackers can exploit them.

The practice involves 6 steps:

1. Identify assets: What needs to be protected? This may include data, hardware, software, networks, or any other resources that are critical to the organization.

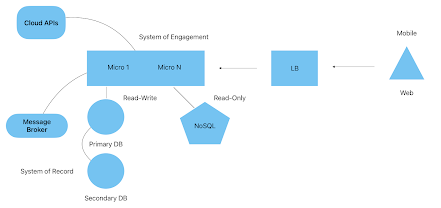

2. Create a data flow diagram: How does data flow through a system or application? What components talk to each other and how? A data flow diagram shows component interactions, ports, and protocols.

3. Identify potential threats: What threats to the system exist? External threats include hackers and malware, while internal threats may include malicious or careless authorized users and simple human error. What harm could each threat cause to the assets?

4. Assess risk: Evaluate the likelihood and impact of each threat. How serious is the threat, and how likely is it to happen? What would the impact on the organization be?

5. Prioritize threats: After evaluating risk, rank threats by severity so the organization can address the most severe threats first.

6. Mitigate threats: The final step in threat modeling is to develop and implement measures that mitigate the identified threats. These could include security controls such as firewalls, intrusion detection/prevention systems (IDS/IPS), or a Security Information and Event Management (SIEM) system. Training employees on security best practices also goes a long way, as does regularly testing and updating security measures.
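Steps 3 through 5 can be sketched in a few lines of Python. This is a minimal sketch, assuming a common convention that scores risk as likelihood times impact on 1-to-5 scales. The `Threat` class, the `prioritize` function, the scales, and the example threats are all hypothetical, not part of any particular threat-modeling tool.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def risk(self) -> int:
        # Step 4: a common convention scores risk as likelihood x impact
        return self.likelihood * self.impact


def prioritize(threats: list[Threat]) -> list[Threat]:
    """Step 5: order threats from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)


if __name__ == "__main__":
    # Step 3: hypothetical threats with illustrative scores
    threats = [
        Threat("Malware on an admin workstation", likelihood=3, impact=4),
        Threat("Credential misuse by an insider", likelihood=2, impact=5),
        Threat("Misconfigured firewall rule", likelihood=4, impact=4),
    ]
    for t in prioritize(threats):
        print(f"{t.risk:>2}  {t.name}")
```

Multiplying the two scores is only one option; some teams use a lookup matrix instead, so that a rare but catastrophic threat is not ranked below a frequent nuisance.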

A review of a threat model should happen at least once a year as part of an SRP to catch new threats and to assess architecture changes to the system or application as it evolves. By identifying potential threats proactively, organizations can significantly reduce the risk of a cybersecurity attack.
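The review policy can be expressed as a simple check. This is a minimal sketch, assuming "at least once a year" means every 365 days and that any architecture change should also trigger a review; `review_due` and its parameters are hypothetical names.

```python
from datetime import date, timedelta

# Assumption: "at least once a year" is interpreted as every 365 days
MAX_REVIEW_INTERVAL = timedelta(days=365)


def review_due(last_review: date, today: date,
               architecture_changed: bool = False) -> bool:
    """Return True if the threat model should be reviewed now."""
    # An architecture change triggers a review regardless of the date
    if architecture_changed:
        return True
    # Otherwise, review once the yearly interval has elapsed
    return today - last_review >= MAX_REVIEW_INTERVAL


if __name__ == "__main__":
    print(review_due(date(2023, 1, 10), date(2023, 6, 1)))  # False
    print(review_due(date(2023, 1, 10), date(2023, 6, 1),
                     architecture_changed=True))            # True
```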

Sample Threat Model

In this sample threat model, the focus is a Privileged Access Management (PAM) solution deployed deep inside the corporate network in the Blue Zone. To identify the assets that need protection, the diagram expands out to include all inbound connections into the PAM solution and all outbound connections from it.

Figure: Sample Threat Model

Network Zones

The sample diagram can be broken down by network zone: Blue, Yellow, and Red.

Blue - Highly restricted. This zone contains mission-critical data and the systems that operate on it. Applications here can talk to each other, but they shouldn't reach out to any of the other zones. If they do, that traffic should be carefully monitored.

Yellow - Like a DMZ (demilitarized zone), this zone hosts a services layer of APIs and user interfaces that are exposed to authenticated and authorized users. It also hosts a SIEM that ingests logs from external sources.

Red - This zone is uncontrolled. It could be a customer's network or the big bad internet. It is completely untrusted because only limited controls can be put in place there, so it is viewed as a major security risk. Sensitive assets inside the organization must be isolated from this zone as much as possible.
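The zone rules can be summarized as a small policy function. This is a hypothetical sketch that classifies a connection between zones as `allow`, `monitor`, or `deny`. The `Zone` enum, `evaluate_flow`, and the decision for Yellow-to-Blue traffic are assumptions; in practice such rules are enforced by firewalls and network ACLs rather than application code.

```python
from enum import Enum


class Zone(Enum):
    BLUE = "blue"      # highly restricted, mission-critical systems
    YELLOW = "yellow"  # DMZ-like services layer (APIs, UIs, SIEM)
    RED = "red"        # uncontrolled and untrusted


def evaluate_flow(src: Zone, dst: Zone, authenticated: bool = False) -> str:
    """Classify a connection from src to dst as 'allow', 'monitor', or 'deny'.

    Hypothetical policy derived from the zone descriptions.
    """
    if src is dst:
        # Traffic that stays within one zone (e.g. Blue-to-Blue app calls)
        return "allow"
    if src is Zone.BLUE:
        # Blue should not reach other zones; if it does, watch it closely
        return "monitor"
    if src is Zone.RED and dst is Zone.BLUE:
        # Sensitive assets must stay isolated from the untrusted zone
        return "deny"
    if src is Zone.RED and dst is Zone.YELLOW:
        # Yellow services are exposed only to authenticated users
        return "allow" if authenticated else "deny"
    if src is Zone.YELLOW and dst is Zone.BLUE:
        # Assumption: the services layer may call into Blue systems
        return "allow"
    # Default deny for any flow not explicitly covered
    return "deny"


if __name__ == "__main__":
    print(evaluate_flow(Zone.RED, Zone.YELLOW, authenticated=True))  # allow
    print(evaluate_flow(Zone.BLUE, Zone.RED))                        # monitor
```

Ending with a default deny means any flow the policy did not anticipate is blocked rather than silently permitted.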

Assets

The labels A1-A18 identify and classify the assets that need to be protected. In this model, these assets include logs, alert data, credentials, backups, device health metrics, key stores, and SIEM data. Because this threat model focuses on the PAM solution itself, the primary asset is the elevated customer device credentials, labeled A4.

Threats

The red labels T1-T5 represent threats to the applications and data inside each zone. This model does not treat external unauthenticated users as a threat, because the system is locked down to prevent that type of access. It does, however, treat both internal authenticated and internal unauthenticated users as threats, simply because human error could lead to a security incident. SQL injection is also identified as a threat to the databases.
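To illustrate the SQL injection threat and its standard mitigation, the sketch below uses Python's built-in `sqlite3` module with a hypothetical `users` table. Concatenating user input into the SQL text lets a crafted string change the query, while a parameterized query passes the input strictly as data.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is concatenated directly into the SQL text
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized query: the driver binds username as a value, never as SQL
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def demo() -> tuple:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)",
                     [("alice",), ("bob",)])
    payload = "' OR '1'='1"  # classic injection string
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)


if __name__ == "__main__":
    unsafe, safe = demo()
    print("unsafe query returned", len(unsafe), "rows")  # every user leaks
    print("safe query returned", len(safe), "rows")      # no match
```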

Controls

To mitigate threats, controls and safeguards are put in place. In this model, a VPN sits in front of the internal network, and VPN access is required to get in. All traffic is routed through an encrypted VPN tunnel, shown in the diagram as the dotted line labeled C1 underneath the Red Zone. Other controls include firewalls that allow traffic only through specific ports and protocols, and SSL/TLS encryption of data in transit.
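A firewall that allows traffic only through specific ports and protocols is effectively a default-deny allowlist. The sketch below models that idea; the `Rule` type, `is_allowed`, and the listed ports are hypothetical, since the sample model does not specify which ports are open.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    protocol: str
    port: int


# Hypothetical allowlist; the real ports depend on the deployment
ALLOWED_RULES = frozenset({
    Rule("tcp", 443),   # HTTPS for APIs and user interfaces
    Rule("tcp", 22),    # SSH sessions brokered by the PAM solution
    Rule("tcp", 6514),  # syslog over TLS into the SIEM
})


def is_allowed(protocol: str, port: int) -> bool:
    """Default deny: permit only explicitly listed protocol/port pairs."""
    return Rule(protocol.lower(), port) in ALLOWED_RULES


if __name__ == "__main__":
    print(is_allowed("TCP", 443))  # True
    print(is_allowed("tcp", 23))   # False: Telnet is not on the list
```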

An intrusion detection and/or prevention system (IDS/IPS), labeled C7, captures and analyzes traffic. Anything suspicious generates an alert for security personnel to act on. An IDS can detect a wide range of attacks, including network scans, port scans, denial-of-service (DoS) attacks, malware infections, and unauthorized access attempts. The remaining controls throughout the diagram protect assets from the other identified threats.
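One signal an IDS looks for, a port scan, can be sketched as a sliding-window count of distinct destination ports per source address. This is a simplified heuristic, not how any particular IDS/IPS product works; the event format, `detect_port_scans`, and the thresholds are assumptions.

```python
from collections import Counter, defaultdict


def detect_port_scans(events, max_ports=20, window_seconds=60):
    """Return source IPs that hit more than max_ports distinct ports
    within any window_seconds-long span.

    events: iterable of (timestamp_seconds, source_ip, dest_port).
    Thresholds are illustrative assumptions, not tuned values.
    """
    by_source = defaultdict(list)
    for ts, src, port in events:
        by_source[src].append((ts, port))

    alerts = set()
    for src, hits in by_source.items():
        hits.sort()  # order each source's hits by timestamp
        window = Counter()  # port -> hits currently inside the window
        start = 0
        for ts, port in hits:
            window[port] += 1
            # Evict hits older than the window relative to the current one
            while ts - hits[start][0] > window_seconds:
                old_port = hits[start][1]
                window[old_port] -= 1
                if window[old_port] == 0:
                    del window[old_port]
                start += 1
            if len(window) > max_ports:
                alerts.add(src)
                break
    return alerts
```

A real IDS combines many such signals and tunes thresholds to its network; a slow scan spread over hours would evade this simple window.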

Data Classification

The sample threat model does not have an extensive data classification scheme, as it only identifies sensitive data. But other models could provide a more granular data classification scheme to better explain what kind of data is stored where and how to protect it.
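A more granular scheme might look like the sketch below, which maps hypothetical classification levels to the zones where data may be stored and to an encryption-at-rest requirement. The level names, the numeric zone trust ordering, and the handling rules are illustrative assumptions.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. privileged credentials or PHI


# Assumed trust ordering based on the zone descriptions: Red < Yellow < Blue
ZONE_TRUST = {"red": 0, "yellow": 1, "blue": 2}

# Hypothetical rule: minimum zone trust required to store each level
MIN_TRUST = {
    Classification.PUBLIC: 0,
    Classification.INTERNAL: 1,
    Classification.CONFIDENTIAL: 1,
    Classification.RESTRICTED: 2,
}


def may_store(level: Classification, zone: str) -> bool:
    """Return True if data at this level may be stored in the given zone."""
    return ZONE_TRUST[zone] >= MIN_TRUST[level]


def requires_encryption_at_rest(level: Classification) -> bool:
    """Hypothetical rule: Confidential and above are encrypted at rest."""
    return level >= Classification.CONFIDENTIAL


if __name__ == "__main__":
    print(may_store(Classification.RESTRICTED, "yellow"))  # False
```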

For example, a model for a healthcare system might classify some data as protected health information (PHI), which is regulated by HIPAA (the Health Insurance Portability and Accountability Act). HIPAA protects the privacy and security of individuals' medical records and PHI. The law applies to health care providers, health plans, and health care clearinghouses, as well as their business associates who handle PHI. It requires covered entities to implement administrative, physical, and technical safeguards to protect the confidentiality, integrity, and availability of PHI. Non-compliance can result in significant fines and legal penalties.

Regulations such as HIPAA, PCI-DSS, and GDPR require organizations to implement security measures that protect sensitive data. Threat modeling helps organizations meet these requirements by documenting where sensitive data lives, what threatens it, and which controls protect it.

Once it's created, a threat model diagram can be reviewed by the organization's security team and kept current with architectural changes as the system evolves over time. The threat model provides a basis for a re-assessment of the threats and controls in place to protect assets.
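During a re-assessment, one useful check is whether every identified threat is still addressed by at least one control. The sketch below reuses the model's T and C label style, but the threat-to-control mapping is hypothetical; the sample model does not state which controls address which threats.

```python
def unmitigated(threats, mitigations):
    """Return the IDs of threats that no control currently addresses.

    threats: iterable of threat IDs, e.g. "T1".
    mitigations: dict mapping control ID -> set of threat IDs it addresses.
    """
    # Union of every threat covered by at least one control
    covered = set().union(*mitigations.values())
    return sorted(set(threats) - covered)


if __name__ == "__main__":
    # Hypothetical mapping for illustration only
    threats = ["T1", "T2", "T3", "T4", "T5"]
    mitigations = {"C1": {"T1"}, "C7": {"T2", "T3"}}
    print(unmitigated(threats, mitigations))  # ['T4', 'T5']
```

Running a check like this after each architecture change flags threats whose only control was removed or never existed.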

By investing in threat modeling, an organization can improve its security posture and reduce the risk of cyberattacks.